CyberAI - Intercontinental Strategic Imperative

AI in cybersecurity presents great opportunities but, as with any powerful general-purpose dual-use technology, it also brings great challenges. AI can improve cybersecurity and defence measures, allowing for greater system robustness, resilience and responsiveness, but AI in the form of ML and deep learning will also escalate sophisticated cyberattacks, enabling faster, better targeted and more destructive attacks.

The application of AI in cybersecurity also poses security and ethical concerns. Among other things, it remains unclear how responsibilities for autonomous response systems should be ascribed, how to make sure systems are behaving according to expectations, or what security risks are carried by the increasing anthropomorphisation of AI systems. Anthropomorphic language at times appears intrinsic to the field of AI research.

The Organisation for Economic Co-operation and Development (OECD) defines an AI system as a “machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations or decisions influencing real or virtual environments.” This definition has also been accepted and adopted by the European Commission in the Regulation on a European approach for AI.

ML is a set of techniques that allow machines to learn in an automated manner through patterns and inferences rather than through explicit instructions from a human. ML approaches often teach machines to reach an outcome by showing them many examples of correct outcomes. However, they can also define a set of rules and let the machine learn by trial and error.
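To make "learning from examples" concrete, here is a minimal sketch in Python. The data, the feature (failed logins per hour) and the function names are all hypothetical and chosen only for illustration. No rule such as "more than N failed logins is an attack" is written by hand. The program derives its decision boundary from labelled examples.

```python
from statistics import mean

# Hypothetical labelled examples: (failed_logins_per_hour, label).
EXAMPLES = [
    (1, "benign"), (2, "benign"), (3, "benign"),
    (40, "attack"), (55, "attack"), (61, "attack"),
]

def fit_centroids(examples):
    """Learn one average value (centroid) per label from the examples."""
    by_label = {}
    for value, label in examples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, value):
    """Assign the label whose learned centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# "Training" is just computing the per-label averages from the examples.
centroids = fit_centroids(EXAMPLES)
```

Calling `predict(centroids, 5)` picks the label whose learned average is nearest to 5. Real ML systems use far richer features and models, but the principle of inferring the rule from data is the same.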

Neural networks involve repeatedly interconnecting thousands or millions of simple transformations into a larger statistical machine that can learn sophisticated relationships between inputs and outputs. Neural networks adjust their own internal parameters to find and optimise links between inputs and outputs.

Deep learning is a subset of neural networks composed of many hierarchical layers, which increases the complexity of the relationships that can be learned between input and output.

AI in cybersecurity falls into three categories: detection (51%), prediction (34%) and response (18%).

Driving forces that are boosting the use of AI in cybersecurity:

1. Speed of impact – In some of the major attacks, the average time of impact on the organisation is 4 minutes. Today's attacks are not just ransomware, nor do they just target certain systems or vulnerabilities; they can move and adjust based on what the targets are doing. These kinds of attacks hit incredibly quickly, leaving little time for human intervention.

2. Operational complexity – The proliferation of cloud computing platforms, and the fact that those platforms can be operationalised and deliver services in the millisecond range, means that you cannot have a lot of humans in the loop and have to think about a more analytics-driven capability.

3. Skills gap – There is a global shortage of about a million and a half cybersecurity professionals. This level of scarcity pushes the industry to automate at a faster rate.

AI can help security teams in three ways: by improving system robustness, response and resilience (the 3R model).

AI can improve system robustness, that is, the ability of a system to maintain its initial assumed stable configuration even when it processes erroneous inputs, thanks to self-testing and self-healing software. This means that AI systems can be used to improve testing for robustness, delegating the process of verification and validation to the machines.

AI can strengthen system resilience, the ability of a system to resist and tolerate an attack, by facilitating threat and anomaly detection.
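As a rough illustration of anomaly detection, the sketch below learns a baseline from normal activity and flags values that deviate strongly from it. It is a simple statistical z-score check, not a trained ML model. The traffic figures, function names and threshold are assumptions made for the example.

```python
from statistics import mean, stdev

def fit_baseline(samples):
    """Summarise normal behaviour as (mean, standard deviation)."""
    return mean(samples), stdev(samples)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation in the baseline: anything different is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical requests-per-minute observed during normal operation.
baseline_traffic = [100, 104, 98, 101, 97, 103, 99, 102]
baseline = fit_baseline(baseline_traffic)
```

With this baseline, a reading close to 100 passes while a sudden spike to several hundred is flagged. Production systems typically model many signals at once and adapt the baseline over time.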

AI can be used to enhance response, the capacity of a system to respond autonomously to attacks, to identify vulnerabilities in other machines, and to operate strategically by deciding which vulnerability to attack and by launching more aggressive counterattacks.

Identifying when to delegate decision making and response actions to AI is closely related to the need for an individual organisation to perform a risk impact assessment. In many cases AI will augment, without replacing, the decision making of human security analysts and will be integrated into processes that accelerate response times.

AI cyberdefense systems are being implemented to proactively detect and mitigate threats. Traditional antivirus relies on blacklisting historic threats based on virus signatures. AI-based endpoint detection and response (EDR) can recognise aspects of software that may be malicious without needing to rely on a predefined database.
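The difference between the two approaches can be sketched as follows. This is an illustrative toy, not how any particular antivirus or EDR product works. The payload bytes, behaviour names and weights are hypothetical, and a real AI-based system would learn its weights from data rather than have them hand-picked.

```python
import hashlib

def sha256(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

# Signature approach: a blacklist of hashes of previously seen threats.
KNOWN_BAD = b"evil-payload-v1"  # hypothetical sample
SIGNATURE_BLACKLIST = {sha256(KNOWN_BAD)}

def signature_match(sample: bytes) -> bool:
    """True only if this exact file has been seen and blacklisted before."""
    return sha256(sample) in SIGNATURE_BLACKLIST

# Behaviour approach: score what the software does, not what it hashes to.
# Hypothetical features and hand-picked weights, for illustration only.
WEIGHTS = {
    "writes_to_startup": 0.4,
    "encrypts_many_files": 0.5,
    "contacts_unknown_host": 0.3,
}

def behaviour_score(observed: set) -> float:
    """Sum the weights of every suspicious behaviour that was observed."""
    return sum(w for feature, w in WEIGHTS.items() if feature in observed)

def behaviour_flag(observed: set, threshold: float = 0.6) -> bool:
    return behaviour_score(observed) >= threshold
```

A slightly modified variant such as `b"evil-payload-v2"` produces a different hash and slips past `signature_match`, while `behaviour_flag` can still catch it if it performs the same suspicious actions.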

MAJOR APPLICATIONS IN - EDR - USER AUTHENTICATION - NETWORK DETECTION - INTRUSION DETECTION AND PREVENTION (IDS/IPS) - LOG MANAGEMENT AND SIEM.

Behavioural biometrics identifies users based on unique aspects of their digital activity. Active authentication systems enhance cybersecurity by ensuring ongoing user authentication.
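One way to picture ongoing authentication is to compare a user's current typing rhythm with a profile built at enrolment. The sketch below assumes keystroke timing (milliseconds between key presses for a fixed phrase) as the behavioural signal. The numbers, function names and 15 ms tolerance are invented for illustration.

```python
from statistics import mean

def build_profile(enrolment_sessions):
    """Average each inter-key interval position across enrolment sessions."""
    return [mean(position) for position in zip(*enrolment_sessions)]

def session_distance(profile, session):
    """Mean absolute difference (ms) between a session and the profile."""
    return mean([abs(p - s) for p, s in zip(profile, session)])

def still_same_user(profile, session, tolerance_ms=15):
    """Keep the session alive only while typing rhythm matches the profile."""
    return session_distance(profile, session) <= tolerance_ms

# Hypothetical enrolment data: intervals (ms) between keys of a fixed phrase.
enrolment = [[120, 95, 140, 110], [124, 99, 136, 114]]
profile = build_profile(enrolment)
```

A system built along these lines would run the check repeatedly during a session and ask for re-authentication when the rhythm drifts too far from the profile.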

CYBER AI FOR ZERO TRUST NETWORK ACCESS (ZTNA) - Intelligent, dynamic, multi-dimensional security approach.

Smart automation for the complete process. Real-time monitoring to identify breaches (alarm, react, respond). Intelligent behavioural analytics to identify patterns and erratic actions by combining user data, location and user behaviour, with the results logged for future access reference and retrieval. If an APT breach is identified, automatically protect the centralised data vault.
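The combination of user data, location and behaviour described above could be sketched as a per-request risk score. Everything here is an assumption made for illustration: the field names, the weights, the thresholds and the decision labels are not taken from any ZTNA product or standard.

```python
from dataclasses import dataclass

@dataclass
class AccessRequest:
    user: str
    device_known: bool
    country: str
    behaviour_anomaly: float  # 0.0 (typical) to 1.0 (highly unusual)

# Hypothetical record of where each user normally connects from.
USUAL_COUNTRIES = {"alice": {"DE"}}

def risk_score(req: AccessRequest) -> float:
    """Combine user data, location and behaviour into one score (0 to 1)."""
    score = 0.0
    if not req.device_known:
        score += 0.3
    if req.country not in USUAL_COUNTRIES.get(req.user, set()):
        score += 0.3
    score += 0.4 * req.behaviour_anomaly
    return score

def decide(req: AccessRequest, audit_log: list) -> str:
    """Decide on every request and log the outcome for future reference."""
    score = risk_score(req)
    if score < 0.3:
        decision = "allow"
    elif score < 0.6:
        decision = "step_up_auth"
    else:
        decision = "deny_and_lock_vault"
    audit_log.append((req.user, req.country, round(score, 2), decision))
    return decision
```

Because every request is scored and logged, access is never granted on the strength of an earlier login alone, which is the core idea behind zero trust.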

Behavioural Analytics

The application of complex algorithms to individual-level data to derive insights, generate forecasts and make predictions about future human behaviour. Global intelligence agencies could hypothetically implement AI to make predictions about future behaviour. Major application: Insider Threat Detection (ITD) for government, military, etc.

BA is here to stay. BA techniques may be effective not just in refining the assessment of risk but also in discovering new leads about the evolving cyber threat landscape. Increasingly sophisticated uses of AI and BA can be deployed to extract information from bulk datasets.

US Pentagon believes that precognitive AI can predict events 'days in advance' - Global Information Dominance Experiments (GIDE) - AI + CLOUD COMPUTING + SENSORS. GIDE uses a mix of military and civilian sensors to address scenarios where contested logistics might pose a problem. GIDE tech employs an ML-based system to send fast critical updates and alerts. This can prevent surprise aggression or lead to negotiations instead of conflict. Humans will still be heavily involved in the process.

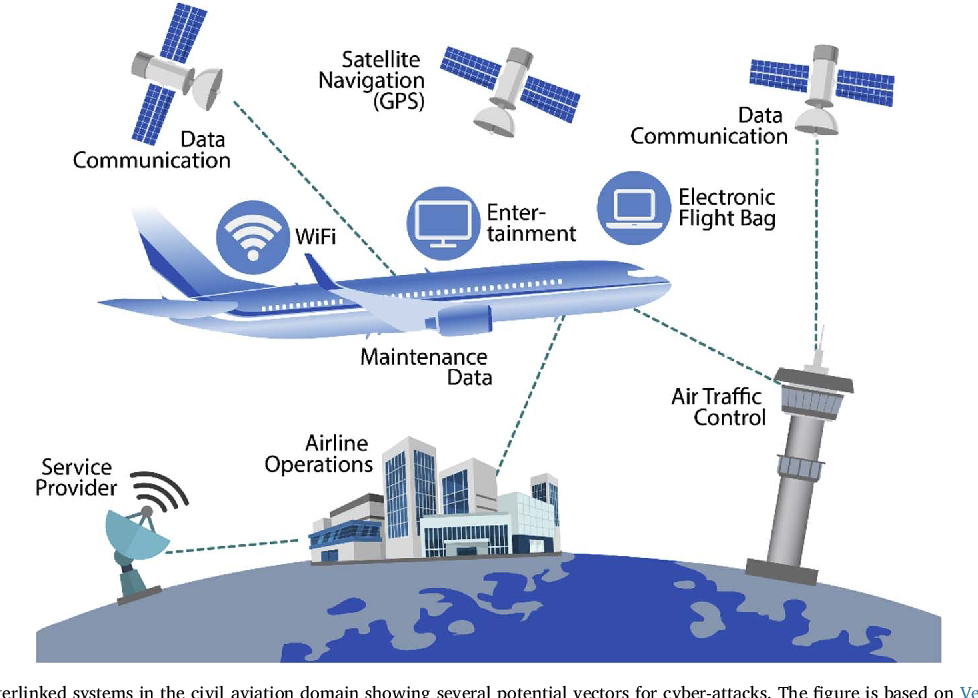

Complex weapons platforms also coordinate attack or defense among geographically disparate forces. A cyberattack on these weapons platforms can crash the software used to run them. That could sideline a fighter jet or a radar that protects an aircraft carrier battlegroup, or cause a military satellite to go offline using non-kinetic means: lasers, radio frequency jammers and cyberattacks. Satellite navigation systems are also at risk of jamming and spoofing. Satellites are also crucial for coordinating electricity grids and time-stamping financial transactions. Geolocation is susceptible to electronic interference.

Attacks include penetrating communication networks, sabotaging databases (for example, deleting spare engines for fighter jets from the spare parts roster) and locking legitimate users out of crucial networks. The NSA wants the military-industrial complex to take cyber vulnerabilities seriously. Unlike the kinetic battlefield, where aircraft, drones and armored fighting vehicles spend most of their time out of contact with the enemy, the wireless nature of computer networks means that those same weapons will be under constant threat of enemy assault. And that requires a different kind of armor.

credit - NATIONAL SECURITY AGENCY.

A supply chain attack is an incredibly expensive operation. Back doors imitate legitimate email users and fool the electronic systems that are supposed to assure the identities of users with the right login credentials and additional authentication. Such an attack has the opportunity to hit a massive quantity of targets, but if it reached too far the APT would lose its incredible access - FireEye

Complex weapon systems and platforms are vulnerable to cyberattack - Weapon systems don't just include hypersonic missiles or lasers. There are computers in the air, at sea and on land. We don't think of weapons systems that way, but none of them works without computers. These computers add functionality, including fire control (correcting and adjusting aim against distant or fast-moving targets), navigation (receiving AGPS signals) and communications (voice and data).

UN-appointed independent rights experts: AI-powered spyware and disinformation are on the rise, and regulation of the space has become urgent. AI-based biometric surveillance systems are increasingly being used in sensitive contexts without individuals' knowledge or consent. Urgent and strict regulatory red lines are needed for technologies that claim to perform emotion or gender recognition. The Human Rights Council-appointed experts condemned the already alarming use and impacts of spyware and surveillance technologies on the work of human rights defenders and journalists, often under the guise of national security and counter-terrorism measures.

The Human Rights Council-appointed experts also called for regulation to address the lightning-fast development of generative AI that's enabling mass production of fake online content which spreads disinformation and hate speech. Encryption and privacy are paramount. They underlined the need to ensure technical solutions, including strong end-to-end encryption and unfettered access to VPNs, to secure and protect digital communications.

"Adversarial AI" - A Threat to military systems - Shift5's

Lospinoso says - The former Army Captain told a Senate Armed

Services panel how AI can impact Military Operations.

Cyber Intelligent Attacks

1. AI-driven malware - Artificially intelligent malware can infect devices faster than ever before, becoming harder to detect, targeting more victims and creating more convincing phishing attacks.

2. Vulnerable applications - Weak application security creates an entry point for APTs to infiltrate computer systems. AI can be used to hide malicious code within applications, sometimes programming the attack to execute well after the app has been installed. Malicious code can be present for years before it strikes. Data obfuscation - An image is worth a thousand lines of malware. Malware hidden in images developed by AI can only be detected by AI.

3. Expanded attack surface - Hybrid work and cloud access on personal devices widen the surface area for cyberattacks. AI creates an even more involved threat landscape, expanding the range of devices and machines that can be used to infiltrate systems.

4. Constant evolution - AI is always evolving. While the benefits include enhanced threat detection, APTs are constantly learning from advanced AI tools to develop more advanced attacks and improve their malware. The dawn of advanced intelligent polymorphic cyberattacks is here.

Therefore it's about time to intelligently automate endpoint security, email security, application security, and identity and access management.

Thanks to

1. NORTH ATLANTIC TREATY ORGANISATION.

2. UN APPOINTED INDEPENDENT RIGHTS EXPERTS.

3. INTELLIGENCE AND NATIONAL SECURITY ALLIANCE.

4. NATIONAL SECURITY AGENCY.

5. CYBERSECURITY AND INFRASTRUCTURE SECURITY AGENCY.

6. INFORMATION SYSTEMS AND SECURITY ASSOCIATION INTERNATIONAL.

7. EUROPEAN DEFENCE AGENCY.

8. EUROPEAN PARLIAMENTARY RESEARCH SERVICE.

1PowerCyber @ easytech4all.net

https://1powercyber.substack.com

https://1powercyber.blogspot.com

https://easytech4all.tumblr.com

https://linktr.ee/1powercyber
